Introduction to ViT (Vision Transformers): Everything You Need to Know


Vision Transformers (ViTs) apply transformer architecture to image data, replacing traditional convolutional layers. Learn how they process images as sequences, why they're effective for classification tasks, and how they compare to CNNs in performance and scalability.

Below, you can find some key points on Vision Transformers (ViTs).

- What is a Vision Transformer (ViT)?

ViT is a deep learning model that applies the transformer architecture (originally used for NLP) to computer vision tasks.

- How do Vision Transformers work?

They split an input image into patches, encode them as tokens, and process them using a transformer encoder with self-attention.

- Why are Vision Transformers important?

When pre-trained on large-scale datasets, they match or outperform convolutional neural networks (CNNs) in many computer vision tasks while requiring substantially fewer computational resources to pre-train.

- What are ViT's advantages over CNNs?

Better at capturing global context and long-range dependencies. More scalable and adaptable to various vision tasks. Requires large-scale pre-training but generalizes well.

- Where are Vision Transformers used?

Image classification, object detection, image segmentation, visual question answering, video processing, and generative modeling.

Transformers, introduced by Vaswani et al. (2017), revolutionized natural language processing (NLP) by leveraging self-attention mechanisms to capture long-range dependencies more effectively than recurrent neural networks (RNNs) and long short-term memory networks (LSTMs).

Their ability to process sequences in parallel and model complex relationships made them the backbone of modern NLP models like BERT and GPT. This success prompted researchers to explore transformers beyond text, adapting them for computer vision tasks traditionally dominated by convolutional neural networks (CNNs).

The Vision Transformer (ViT), proposed by Dosovitskiy et al. (2020), emerged as a groundbreaking adaptation, redefining how images are processed in deep learning. ViT treats images as sequences of fixed-size patches (typically 16x16 pixels), akin to words in a sentence, and feeds them into a standard transformer encoder.

This approach enables ViT to model global relationships across an image using self-attention, overcoming a key limitation of CNNs, which rely on local receptive fields and struggle with long-range dependencies.

This article explores the essentials of Vision Transformers, starting with their core architecture: patch embeddings, multi-head self-attention, and transformer encoders. It then compares ViTs to CNNs, emphasizing their advantages in global feature learning, reviews key research, and surveys applications in image classification, object detection, and beyond.

1. How Vision Transformers Work

Vision Transformers (ViTs) adapt the transformer architecture—originally designed for natural language processing—to process images. Unlike convolutional neural networks (CNNs), which use convolutional layers to detect local features, ViTs treat an image as a sequence of patches and use self-attention to capture global relationships. Here’s a step-by-step breakdown of the process outlined in the pseudocode:

1.1 Pseudocode

function ViT(image):
    patches = split_image_into_patches(image, patch_size)
    patch_embeddings = linear_projection(patches)
    classification_token = initialize_classification_token()
    input_sequence = prepend(classification_token, patch_embeddings)
    positional_encodings = get_positional_encodings(input_sequence)  # one per token, [CLS] included
    input_sequence = input_sequence + positional_encodings
    transformer_output = transformer_encoder(input_sequence)
    class_prediction = linear_layer(transformer_output[0])  # output of the classification token
    return class_prediction

1.2 Explanation

- Image Patching: The input image is split into fixed-size patches, such as 16x16 pixels. Each patch is flattened into a vector. For example, a 224x224 image with 16x16 patches yields 196 patches (since 224 ÷ 16 = 14, and 14 × 14 = 196). This differs from CNNs, which slide convolutional kernels across the image to extract local patterns.

- Linear Projection (Embedding): Each flattened patch is passed through a linear layer to project it into a fixed-size embedding vector (e.g., 768 dimensions). This step is analogous to creating word embeddings in NLP, transforming raw pixel data into a format suitable for the transformer.

- Positional Encodings: Transformers don’t inherently understand spatial order, so positional encodings are added to the patch embeddings. These encodings provide information about each patch’s location in the original image, ensuring the model can distinguish between patches from different positions.

- Classification Token: A special learnable token (often called [CLS]) is prepended to the sequence of patch embeddings. This token serves as a summary representation of the entire image for classification tasks, aggregating information during processing.

- Transformer Encoder: The full sequence (classification token + patch embeddings with positional encodings) is fed into a transformer encoder. The encoder uses multi-head self-attention to compute relationships between all patches simultaneously, capturing global dependencies across the image—unlike CNNs, which focus on local regions.

- Classification: After processing, the output corresponding to the classification token (the first element of the transformer’s output) is extracted and passed through a linear layer. This produces the final class prediction, such as identifying whether an image contains a cat or a dog.
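The steps above can be sketched as a small PyTorch module. This is an illustrative sketch under stated assumptions, not the reference implementation: the class name `TinyViT` is hypothetical, the sizes (32×32 inputs, 8×8 patches, D = 64, 2 layers, 10 classes) are toy values chosen so it runs quickly on a CPU, and PyTorch's built-in `nn.TransformerEncoderLayer` (pre-norm, GELU) stands in for the ViT encoder.

```python
import torch
import torch.nn as nn


class TinyViT(nn.Module):
    """Hypothetical minimal ViT classifier mirroring the pseudocode (toy sizes)."""

    def __init__(self, image_size=32, patch_size=8, in_chans=3,
                 dim=64, depth=2, heads=4, num_classes=10):
        super().__init__()
        assert image_size % patch_size == 0, "patch size must divide image size"
        num_patches = (image_size // patch_size) ** 2
        self.patch_size = patch_size
        # Linear projection of each flattened P*P*C patch to D dimensions
        self.proj = nn.Linear(patch_size * patch_size * in_chans, dim)
        # Learnable [CLS] token and learnable positional embeddings for N + 1 tokens
        self.cls_token = nn.Parameter(torch.zeros(1, 1, dim))
        self.pos_embed = nn.Parameter(torch.randn(1, num_patches + 1, dim) * 0.02)
        layer = nn.TransformerEncoderLayer(
            d_model=dim, nhead=heads, dim_feedforward=4 * dim,
            activation="gelu", batch_first=True, norm_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers=depth)
        self.norm = nn.LayerNorm(dim)
        self.head = nn.Linear(dim, num_classes)

    def patchify(self, x):
        # (B, C, H, W) -> (B, N, P*P*C), patches in row-major order
        b, c, h, w = x.shape
        p = self.patch_size
        x = x.reshape(b, c, h // p, p, w // p, p)
        x = x.permute(0, 2, 4, 3, 5, 1)  # (B, H/P, W/P, P, P, C)
        return x.reshape(b, (h // p) * (w // p), p * p * c)

    def forward(self, x):
        tokens = self.proj(self.patchify(x))               # (B, N, D)
        cls = self.cls_token.expand(x.shape[0], -1, -1)    # (B, 1, D)
        tokens = torch.cat([cls, tokens], dim=1) + self.pos_embed
        out = self.encoder(tokens)                         # (B, N + 1, D)
        return self.head(self.norm(out[:, 0]))             # logits from [CLS]


model = TinyViT()
logits = model(torch.randn(2, 3, 32, 32))
print(logits.shape)  # torch.Size([2, 10])
```

Swapping in 224×224 inputs, 16×16 patches, D = 768, 12 heads, and 12 layers gives ViT-Base-like shapes, although training such a model from scratch needs the large-scale data discussed in this article.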

The table below compares key features of ViTs and CNNs:

While CNNs excel with smaller datasets, ViTs require substantial pre-training on large datasets like JFT-300M to achieve superior performance, such as 85.8% top-1 accuracy on ImageNet (Dosovitskiy et al., 2020). This process highlights ViTs’ ability to scale with large datasets and model global context, making them a powerful alternative to CNNs in computer vision tasks.

💡 Pro Tip: Check out a concise overview of Computer Vision Applications to see how ViTs fit into real-world use cases.

2. Key Components of Vision Transformers

Vision Transformers (ViTs) revolutionize computer vision by adapting the transformer architecture—initially developed for natural language processing—to process images as sequences of patches. Unlike convolutional neural networks (CNNs), which rely on local feature extraction, ViTs leverage self-attention to capture global dependencies across an entire image.

This section dissects the core components that form the technical foundation of ViTs, enabling state-of-the-art performance in image recognition and beyond.

2.1 Image Patches and Embeddings

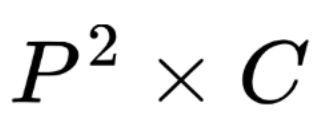

Before a transformer can process an image, the image must be converted into a sequence of vector tokens. ViT does this by splitting the image into fixed-size patches and embedding them. Suppose our input image has height H, width W, and C color channels. We choose a patch size P × P (e.g., 16×16). This yields

$$N = \frac{H \cdot W}{P^2}$$

patches (assuming P divides both H and W). Each patch is a small image of size P × P × C. We flatten this patch into a vector of length:

$$P^2 \cdot C$$

Then, we multiply by a learned weight matrix to get a D-dimensional patch embedding (for example, D = 768 in ViT-Base). This linear projection plays a role analogous to word embeddings in NLP. Formally, if $\mathbf{x}_i \in \mathbb{R}^{P^2 \cdot C}$ is the flattened pixel vector of patch i, the embedding is:

$$\mathbf{z}_i = \mathbf{x}_i W_e, \qquad W_e \in \mathbb{R}^{(P^2 \cdot C) \times D}$$

where $W_e$ is a learned projection matrix and D is the model's hidden dimension.

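The sequence fed into the Transformer will be:

$$[\mathbf{x}_{\text{class}};\ \mathbf{z}_1;\ \mathbf{z}_2;\ \dots;\ \mathbf{z}_N]$$

where $\mathbf{x}_{\text{class}}$ is a special learnable [CLS] token embedding (of dimension D) prepended to the patch embeddings. ViT also adds a positional embedding $\mathbf{p}_i$ (a learned vector of length D) to each of these tokens, so the actual input is:

$$\mathbf{z}^{(0)} = [\mathbf{x}_{\text{class}} + \mathbf{p}_0;\ \mathbf{z}_1 + \mathbf{p}_1;\ \dots;\ \mathbf{z}_N + \mathbf{p}_N]$$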

This way, the model retains information about each patch’s location in the original image. The patch + position embeddings constitute the input to the Transformer encoder.

In practice, the patch embedding projection can be implemented by a single fully connected layer applied to the flattened patches, or equivalently by a convolution with kernel size P and stride P (many implementations use such a conv layer to extract and project patches in one step). Some hybrid models instead use a CNN stem to extract feature patches, but the standard ViT uses raw image patches.
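These equations can be traced in a few lines of NumPy. The sketch assumes ViT-Base-style sizes (a 224×224 RGB image, P = 16, D = 768); the projection matrix W_e, the zero [CLS] vector, and the positional embeddings are random or placeholder values rather than trained weights.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W, C, P, D = 224, 224, 3, 16, 768

image = rng.random((H, W, C), dtype=np.float32)  # stand-in for a real image

# 1) Split into non-overlapping P x P patches and flatten each to length P*P*C
N = (H * W) // (P * P)
patches = (image.reshape(H // P, P, W // P, P, C)
                .transpose(0, 2, 1, 3, 4)        # (H/P, W/P, P, P, C)
                .reshape(N, P * P * C))          # (N, P^2 * C), row-major patch order

# 2) Linear projection z_i = x_i W_e (random placeholder; learned in a real model)
W_e = rng.normal(0.0, 0.02, size=(P * P * C, D)).astype(np.float32)
z = patches @ W_e                                # (N, D)

# 3) Prepend the [CLS] embedding, then add one positional embedding per token
x_class = np.zeros((1, D), dtype=np.float32)     # placeholder for the learned [CLS] vector
pos = rng.normal(0.0, 0.02, size=(N + 1, D)).astype(np.float32)
z0 = np.concatenate([x_class, z], axis=0) + pos

print(N, patches.shape, z0.shape)  # 196 (196, 768) (197, 768)
```

A stride-P convolution with kernel size P whose weights are a suitably reshaped copy of W_e computes the same projection, which is why the conv-based implementation mentioned above is interchangeable with this reshape-and-multiply form.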

💡Pro Tip: If you are building on transformer foundations in vision, our Diffusion Transformers article shows how diffusion processes enhance transformers for image generation and representation.

2.2 Self-Attention Mechanism

The self-attention mechanism is fundamental to the success of ViTs, enabling the model to weigh the relevance of each patch relative to all others. In multi-head self-attention, patch embeddings are linearly projected into query (Q), key (K), and value (V) vectors. The attention output is computed as follows:

$$\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $d_k$ is the dimension of the key vectors. Dividing by $\sqrt{d_k}$ keeps the dot products from growing with $d_k$ and saturating the softmax, which stabilizes gradients (Vaswani et al., 2017). This operation allows the model to focus on globally relevant patches (e.g., attending to an object's parts scattered across the image), unlike CNNs' local receptive fields. Multiple attention heads run in parallel, each with its own projections, capturing diverse relationships and enhancing feature learning. This global context awareness sets ViTs apart in modeling complex visual patterns.
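A NumPy sketch of multi-head self-attention following this formula is shown below. It assumes ViT-Base sizes (197 tokens, D = 768, 12 heads) and random weight matrices in place of learned ones; biases, dropout, and batching are omitted for brevity.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, W_q, W_k, W_v, W_o, num_heads):
    """x: (T, D) token embeddings; all weight matrices are (D, D)."""
    T, D = x.shape
    d_k = D // num_heads

    def split(m):
        # (T, D) -> (num_heads, T, d_k): each head gets its own slice of the projection
        return m.reshape(T, num_heads, d_k).transpose(1, 0, 2)

    Q, K, V = split(x @ W_q), split(x @ W_k), split(x @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)   # (heads, T, T)
    weights = softmax(scores, axis=-1)                 # each row sums to 1
    heads = weights @ V                                # (heads, T, d_k)
    concat = heads.transpose(1, 0, 2).reshape(T, D)    # re-join the heads
    return concat @ W_o, weights


rng = np.random.default_rng(0)
T, D, H = 197, 768, 12  # [CLS] + 196 patches, ViT-Base width and head count
x = rng.normal(size=(T, D))
W_q, W_k, W_v, W_o = (rng.normal(scale=D ** -0.5, size=(D, D)) for _ in range(4))
out, attn = multi_head_self_attention(x, W_q, W_k, W_v, W_o, num_heads=H)
print(out.shape, attn.shape)  # (197, 768) (12, 197, 197)
```

Each of the 12 heads produces its own 197×197 attention matrix; that per-head matrix is what attention-map visualizations are drawn from.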

2.3 Transformer Encoder

The transformer encoder processes the sequence of patch embeddings (augmented with positional encodings) through multiple identical layers: 12 in ViT-Base, 24 in ViT-Large, and 32 in ViT-Huge. Each layer includes two sub-components:

- Multi-Head Self-Attention: Computes attention across all patches, as described above.

- Feed-Forward Network (FFN): A position-wise two-layer MLP with a GELU activation, applied independently to each token.

Each sub-block is preceded by layer normalization and wrapped in a residual connection (the "pre-norm" arrangement used in ViT), which improves training stability and gradient flow. The encoder iteratively refines the embeddings, with each layer integrating information from the entire image.

By the final layer, the output sequence represents a hierarchical understanding of the input, where each embedding reflects both local patch details and global context. This depth and structure enable ViTs to learn sophisticated visual representations.
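The layer structure above can be sketched in PyTorch as follows. The sketch assumes the pre-norm arrangement and an MLP hidden size of 4·D (the usual ViT ratio), and uses ViT-Tiny sizes (D = 192, 3 heads, 12 layers) to stay light; `EncoderBlock` is an illustrative name rather than a library class, and dropout is omitted.

```python
import torch
import torch.nn as nn


class EncoderBlock(nn.Module):
    """Illustrative pre-norm encoder layer: x + MSA(LN(x)), then x + MLP(LN(x))."""

    def __init__(self, dim=192, heads=3, mlp_ratio=4):
        super().__init__()
        self.norm1 = nn.LayerNorm(dim)
        self.attn = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.norm2 = nn.LayerNorm(dim)
        # Position-wise FFN: the same two-layer MLP is applied to every token
        self.mlp = nn.Sequential(
            nn.Linear(dim, mlp_ratio * dim),
            nn.GELU(),
            nn.Linear(mlp_ratio * dim, dim),
        )

    def forward(self, x):
        h = self.norm1(x)
        attn_out, _ = self.attn(h, h, h, need_weights=False)  # Q, K, V from the same tokens
        x = x + attn_out                   # residual connection around attention
        x = x + self.mlp(self.norm2(x))    # residual connection around the FFN
        return x


# Stack 12 identical layers; the sequence shape is preserved from layer to layer
encoder = nn.Sequential(*[EncoderBlock() for _ in range(12)])
tokens = torch.randn(2, 197, 192)  # 2 sequences of [CLS] + 196 patch tokens
out = encoder(tokens)
print(out.shape)  # torch.Size([2, 197, 192])
```

Because every layer maps a (tokens, D) sequence to another (tokens, D) sequence, depth can be changed freely, which is how the Base, Large, and Huge variants differ.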

2.4 Output and Multi-Modal Extensions

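For classification, ViTs prepend a special classification token ([CLS]) to the patch sequence. After encoding, the output corresponding to this token is passed to a linear layer to produce class predictions:

$$\hat{\mathbf{y}} = \operatorname{softmax}\left(W_{\text{head}}\,\mathbf{h}_{[\text{CLS}]} + \mathbf{b}_{\text{head}}\right)$$

where $\mathbf{h}_{[\text{CLS}]}$ is the final (layer-normalized) encoder output for the [CLS] token and $W_{\text{head}}$, $\mathbf{b}_{\text{head}}$ are the weights and bias of the classification head (Dosovitskiy et al., 2020). This design mirrors NLP's use of a [CLS] token for tasks like sentiment analysis. Beyond classification, ViTs extend to multi-modal tasks, such as visual question answering (VQA), by integrating text embeddings with image patches.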

The transformer then processes both modalities together, enabling cross-modal attention—e.g., linking a question’s keywords to relevant image regions. This flexibility highlights ViTs’ potential in unified vision-language reasoning, broadening their applicability.

2.5 Visualizing Attention Maps

Attention maps extracted from self-attention layers illustrate which patches the model prioritizes. For instance, in classification, these maps often highlight semantically key regions (e.g., objects), enhancing interpretability.
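A common way to draw such a map, sketched here under simple assumptions, is to take one layer's attention weights, keep the row for the [CLS] token, drop its attention to itself, average over heads, and reshape the remaining 196 values into the 14×14 patch grid. The attention weights below are random stand-ins; with Hugging Face Transformers, real ones can be requested by passing output_attentions=True to the model call, which returns one (batch, heads, tokens, tokens) tensor per layer. `cls_attention_map` is a hypothetical helper.

```python
import numpy as np


def cls_attention_map(attn, grid_size):
    """attn: (heads, T, T) attention weights from one layer, token 0 = [CLS].
    Returns a (grid_size, grid_size) map of how strongly [CLS] attends to each patch."""
    cls_to_patches = attn[:, 0, 1:]            # drop the [CLS]->[CLS] entry: (heads, N)
    avg = cls_to_patches.mean(axis=0)          # average over heads: (N,)
    grid = avg.reshape(grid_size, grid_size)   # patches are stored in row-major order
    return grid / grid.max()                   # scale to [0, 1] for display


# Stand-in attention weights: random scores turned into row-wise softmax probabilities
rng = np.random.default_rng(0)
scores = rng.normal(size=(12, 197, 197))
attn = np.exp(scores) / np.exp(scores).sum(axis=-1, keepdims=True)
heatmap = cls_attention_map(attn, grid_size=14)
print(heatmap.shape)  # (14, 14)
```

The resulting 14×14 heatmap is usually upsampled to the input resolution and overlaid on the image.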

Taken together, these components, from patch embeddings through encoder processing to the output head, show how ViTs leverage self-attention for global feature learning, distinguishing them from CNNs and driving their success in modern computer vision.

3. Performance Benchmarks & Research Papers

When Vision Transformers were first introduced, a key question was: Can they outperform CNNs on standard vision benchmarks?

Early results showed that the answer is yes with sufficient data and model size. Dosovitskiy et al. (2020) (ViT paper) reported that a ViT model pre-trained on a large private dataset (JFT-300M, containing 300 million images) achieved state-of-the-art results on ImageNet and other vision tasks.

As shown above, a large Vision Transformer (ViT-L/16) pre-trained on a massive dataset roughly matched or surpassed the accuracy of the best convolutional network at the time (EfficientNet-L2), which itself leveraged extra data and distillation.

The ViT achieved this with far fewer training resources: the authors reported roughly 4× less pre-training compute than the CNN. In other words, Transformers scaled so well that they could reach higher accuracy more efficiently once given enough data.

Another interesting finding in the ViT paper was that larger ViT models benefited disproportionately from more data. For instance, ViT-Huge (632M parameters) significantly outperformed smaller ViTs when pre-trained on the full JFT-300M, whereas on smaller pre-training datasets such as ImageNet, the larger models overfit and could even underperform ViT-Base.

This demonstrated a key point: CNNs have strong inductive biases that help in low-data regimes, but transformers excel in the high-data regime, where their capacity can soak up all the information.

Following the original ViT, many research papers have built on its success:

- DeiT (Data-efficient Image Transformers, 2021) showed that with improved training strategies (augmentation, regularization, and knowledge distillation from a CNN), one can train ViTs on ImageNet-1k from scratch and get ~85% top-1 accuracy, closing the gap without external data. This made ViTs much more accessible.

- Swin Transformer (2021) introduced a hierarchical Vision Transformer that operates on local “windows” of patches (like localized attention) and shifts them to mix information – achieving great results on detection and segmentation with better efficiency. We’ll discuss it more shortly.

- CrossViT, CaiT, CvT, and other variants explored different ways of combining convolutional tokens with ViTs or tweaking the architecture for performance gains.

- Scaling to billions of parameters: Recently, researchers have scaled ViTs to extremely large sizes (e.g., a 22-billion-parameter ViT by Google Brain). When pre-trained on ever larger datasets or via self-supervised methods, these giant models push accuracy higher (over 90% top-1 on ImageNet).

4. Pre-Training and Fine-Tuning ViTs

Vision Transformers (ViTs) often need large-scale pre-training to excel. ResNets train well from scratch on about a million images (ImageNet-1k), but ViTs trained on the same amount of data tend to underperform them because of their weaker inductive biases.

Large-scale supervised pre-training is a simple solution: train on large labeled sets (e.g., ImageNet-21k or JFT-300M) before fine-tuning on smaller tasks. This leverages ViTs’ strong transfer capabilities, requiring only a few epochs to adapt after replacing the classifier head.

If labeled data is scarce, self-supervised pre-training on unlabeled images is effective. Methods like Masked Autoencoders (MAE) and contrastive or self-distillation approaches (MoCo v3, DINO) learn powerful representations without labels; for example, a linear classifier trained on frozen DINO features reaches roughly 80% top-1 accuracy on ImageNet. These encoders can be fine-tuned or used as frozen backbones.
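To make the masked-autoencoder idea concrete, the sketch below shows only its first step: hiding a random 75% of the patch tokens (MAE's default mask ratio) so that the encoder processes just the visible quarter. `random_masking` is a hypothetical helper, the patch embeddings are random stand-ins, and the lightweight decoder and pixel-reconstruction loss that MAE trains are omitted.

```python
import numpy as np


def random_masking(tokens, mask_ratio=0.75, rng=None):
    """MAE-style masking of patch tokens.
    tokens: (N, D). Returns visible tokens, their indices, and a boolean mask (True = hidden)."""
    rng = rng if rng is not None else np.random.default_rng()
    n = tokens.shape[0]
    n_keep = int(round(n * (1 - mask_ratio)))
    perm = rng.permutation(n)              # random shuffle of patch indices
    keep_idx = np.sort(perm[:n_keep])      # visible patches, kept in original order
    mask = np.ones(n, dtype=bool)
    mask[keep_idx] = False                 # hidden patches become reconstruction targets
    return tokens[keep_idx], keep_idx, mask


rng = np.random.default_rng(0)
patch_tokens = rng.normal(size=(196, 768))  # embeddings of a 14x14 patch grid
visible, keep_idx, mask = random_masking(patch_tokens, 0.75, rng)
print(visible.shape, mask.sum())  # (49, 768) 147
```

Because the encoder only sees 49 of 196 tokens, each pre-training step is much cheaper than a full forward pass, which is part of why MAE scales well to large ViTs.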

On smaller datasets like ImageNet-1k, augmentation and regularization (RandAugment, Mixup, CutMix, stochastic depth) are crucial. Training recipes such as AugReg, along with knowledge distillation from a CNN teacher (as in DeiT), enable ViTs to train effectively from scratch.
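Mixup, one of the regularizers listed above, is simple enough to sketch in NumPy: each image is blended with a randomly chosen partner, and the one-hot labels are blended with the same weight. The helper name and alpha = 0.8 are assumptions for illustration; CutMix follows the same label-mixing idea but pastes a rectangular region instead of blending whole images.

```python
import numpy as np


def mixup(images, labels, num_classes, alpha=0.8, rng=None):
    """Blend random pairs of examples and their one-hot labels with lam ~ Beta(alpha, alpha)."""
    rng = rng if rng is not None else np.random.default_rng()
    lam = rng.beta(alpha, alpha)             # mixing weight in (0, 1)
    perm = rng.permutation(len(images))      # random partner for each example
    one_hot = np.eye(num_classes)[labels]    # (B, num_classes)
    mixed_images = lam * images + (1 - lam) * images[perm]
    mixed_labels = lam * one_hot + (1 - lam) * one_hot[perm]  # soft targets
    return mixed_images, mixed_labels, lam


rng = np.random.default_rng(0)
images = rng.random((8, 3, 32, 32))          # a small batch of stand-in images
labels = rng.integers(0, 10, size=8)
mixed_images, mixed_labels, lam = mixup(images, labels, num_classes=10, rng=rng)
print(mixed_images.shape, np.allclose(mixed_labels.sum(axis=1), 1.0))  # (8, 3, 32, 32) True
```

The soft targets are then used with a cross-entropy loss that accepts probability vectors rather than hard class indices.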

Fine-tuning typically uses lower learning rates and layer-wise decay. Positional embeddings can be interpolated to handle different resolutions. Retaining pre-trained normalization stats or adopting BatchNorm (in certain variants) also helps.
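Positional-embedding interpolation can be sketched as follows, assuming learned absolute embeddings with the [CLS] position first (as in the original ViT). The patch positions are reshaped into their 2-D grid, resized with bicubic interpolation, and flattened again, while the [CLS] position is kept unchanged. `interpolate_pos_embed` is an illustrative helper, not a library function.

```python
import torch
import torch.nn.functional as F


def interpolate_pos_embed(pos_embed, new_grid):
    """Resize ViT positional embeddings to a new patch grid.
    pos_embed: (1, 1 + old_grid**2, D) with the [CLS] position first."""
    cls_pos, patch_pos = pos_embed[:, :1], pos_embed[:, 1:]
    old_grid = int(patch_pos.shape[1] ** 0.5)
    dim = patch_pos.shape[-1]
    # (1, N, D) -> (1, D, old_grid, old_grid): treat the embeddings as a D-channel image
    patch_pos = patch_pos.reshape(1, old_grid, old_grid, dim).permute(0, 3, 1, 2)
    patch_pos = F.interpolate(patch_pos, size=(new_grid, new_grid),
                              mode="bicubic", align_corners=False)
    # Back to a flat sequence of new_grid * new_grid positions
    patch_pos = patch_pos.permute(0, 2, 3, 1).reshape(1, new_grid * new_grid, dim)
    return torch.cat([cls_pos, patch_pos], dim=1)


# 224x224 input with 16x16 patches -> 14x14 grid; 384x384 input -> 24x24 grid
pos_224 = torch.randn(1, 1 + 14 * 14, 768)
pos_384 = interpolate_pos_embed(pos_224, new_grid=384 // 16)
print(pos_384.shape)  # torch.Size([1, 577, 768])
```

This lets a model pre-trained at 224×224 be fine-tuned at 384×384 with the same patch size: the sequence simply grows from 197 to 577 tokens.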

Additionally, the choice of optimizer (e.g., AdamW) and a warmup scheduler can improve stability and convergence, leading to smoother training and better results. Partial fine-tuning of later layers can boost downstream performance.
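The fine-tuning ingredients from these two paragraphs (AdamW, a warmup schedule, and layer-wise learning-rate decay) can be combined as below. This is a hedged sketch: the decay factor 0.75, weight decay 0.05, 10-step linear warmup, and cosine decay after warmup are assumed example values, the tiny linear "blocks" are hypothetical stand-ins for a real ViT's encoder layers, and real recipes usually also exclude biases and normalization parameters from weight decay.

```python
import math

import torch
import torch.nn as nn


def layerwise_param_groups(blocks, head, base_lr, decay=0.75, weight_decay=0.05):
    """Layer-wise LR decay: the head gets base_lr, block i (0 = closest to the input)
    gets base_lr * decay ** (num_blocks - i), so early layers change the least."""
    num_blocks = len(blocks)
    groups = [{"params": list(head.parameters()), "lr": base_lr, "weight_decay": weight_decay}]
    for i, block in enumerate(blocks):
        groups.append({"params": list(block.parameters()),
                       "lr": base_lr * decay ** (num_blocks - i),
                       "weight_decay": weight_decay})
    return groups


def warmup_cosine(warmup_steps, total_steps):
    """LR multiplier: linear warmup from 0 to 1, then cosine decay back to 0."""
    def factor(step):
        if step < warmup_steps:
            return step / warmup_steps
        progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
        return 0.5 * (1.0 + math.cos(math.pi * min(1.0, progress)))
    return factor


# Hypothetical stand-in for a pre-trained ViT: 4 "blocks" and a new classification head
blocks = nn.ModuleList([nn.Linear(16, 16) for _ in range(4)])
head = nn.Linear(16, 10)
optimizer = torch.optim.AdamW(layerwise_param_groups(blocks, head, base_lr=1e-3))
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, warmup_cosine(10, 100))

x, y = torch.randn(32, 16), torch.randint(0, 10, (32,))
for step in range(100):
    h = x
    for block in blocks:
        h = torch.relu(block(h))
    loss = nn.functional.cross_entropy(head(h), y)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    scheduler.step()  # advance the warmup/cosine schedule once per step
```

LambdaLR multiplies each group's own starting learning rate by the same schedule factor, so the layer-wise ratios are preserved throughout warmup and decay.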

Pre-trained ViTs are available in libraries like Hugging Face Transformers and timm, offering quick inference or fine-tuning. Because self-attention scales quadratically with patch count, memory usage is high, so gradient checkpointing and mixed precision can reduce resource demands. Smaller ViT variants train faster and may overfit less.

Leverage a pre-trained ViT whenever possible, fine-tuning it on your data with standard transfer learning. If training from scratch, ensure sufficient data or robust augmentations. A well-trained ViT remains adaptable to diverse tasks, making it a versatile choice in vision applications.


5. Applications of Vision Transformers (ViTs)

Vision Transformers are being used across a broad range of computer vision tasks. Here, we highlight some key application areas and examples:

- Image Classification: Initially designed for classification, ViTs excel in diverse applications like object recognition (e.g., Google's image recognition) and medical imaging (tumor classification). Several studies report that they are more robust than comparable CNNs on corrupted or perturbed images (e.g., ImageNet-C and ImageNet-A).

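- Object Detection: Transformer-based detectors such as DETR perform direct set prediction of objects without region proposals (DETR itself pairs a CNN backbone with a transformer encoder-decoder). Models like ViTDet and YOLOS build on plain ViT backbones, and Swin Transformer serves as a hierarchical backbone, improving accuracy and scalability on detection benchmarks like COCO.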

- Video Processing: ViTs adapt naturally to video data, leading to architectures like TimeSformer (spatial-temporal attention), ViViT, and VideoMAE, achieving strong results in video understanding tasks such as action recognition and object tracking.

- Generative Tasks: ViTs contribute to creative tasks like image generation (VQGAN+Transformer, Diffusion Transformers), image-to-text captioning (ViT-GPT2), and image enhancement tasks such as inpainting and super-resolution, exploiting their global context modeling abilities.

The adaptability of ViTs means a single pre-trained model can be fine-tuned across multiple tasks by changing minimal components, simplifying deployment in multi-task vision systems.

💡 Pro Tip: Are you curious about the Best Computer Vision Tools for ML Engineers in 2025? Explore solutions for data prep, model building, and deployment.

6. Challenges of ViTs

While Vision Transformers are powerful, they come with their challenges and areas of active research:

- Data Intensive: Extensive datasets (e.g., JFT-300M) are required due to limited inductive biases. Techniques like data augmentation, knowledge distillation, and hybrid CNN-ViT models help address data scarcity.

- Computational Cost: Self-attention scales quadratically with the number of patches, limiting efficiency for large images. Models like Swin Transformer restrict attention to local windows to mitigate this, improving practicality for high-resolution tasks.

- Memory Usage: Large parameter counts and activation storage make training demanding. Solutions include gradient checkpointing, ZeRO optimization, FlashAttention, and smaller distilled ViT variants for edge deployments.

- Translation Equivariance: Unlike CNNs, whose convolutions are translation-equivariant by design, ViTs lack this built-in inductive bias; approaches like convolutional stems, relative positional embeddings, and extensive augmentation address this limitation.
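A quick back-of-the-envelope calculation shows why the computational cost grows so fast. The sketch below counts attention-matrix entries per head for global self-attention versus attention restricted to non-overlapping 7×7 windows of tokens (the core idea of Swin's window attention; shifted windows and the [CLS] token are ignored). The 16×16 patch size and 7×7 window are assumptions for illustration.

```python
def num_tokens(image_size, patch_size):
    """Patch tokens for a square image (the [CLS] token is ignored here)."""
    return (image_size // patch_size) ** 2


def global_attention_pairs(image_size, patch_size):
    """Entries in one head's attention matrix for global self-attention: N^2."""
    n = num_tokens(image_size, patch_size)
    return n * n


def windowed_attention_pairs(image_size, patch_size, window=7):
    """Entries when attention is restricted to non-overlapping window x window token groups."""
    grid = image_size // patch_size
    assert grid % window == 0, "sketch assumes the grid divides evenly into windows"
    num_windows = (grid // window) ** 2
    return num_windows * (window * window) ** 2


for size in (224, 448, 896):
    print(size, num_tokens(size, 16), global_attention_pairs(size, 16),
          windowed_attention_pairs(size, 16))
# 224 196 38416 9604
# 448 784 614656 38416
# 896 3136 9834496 153664
```

Doubling the image side quadruples the number of tokens, so global attention grows 16× while windowed attention grows only 4×.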

7. Getting Started with Vision Transformers (ViT): Code Example

This section provides a practical code snippet for using a pre-trained Vision Transformer (ViT) model from Hugging Face. The example demonstrates loading a ViT model, preprocessing an image, and predicting its class. The code is written in Python, uses the transformers library, and includes comments explaining each step. We also suggest further resources for deeper exploration.

7.1 Code Snippet

from transformers import AutoImageProcessor, ViTForImageClassification
from PIL import Image
import requests
import torch

# Load the pre-trained ViT model and corresponding image processor
model_name = "google/vit-base-patch16-224"
model = ViTForImageClassification.from_pretrained(model_name)
image_processor = AutoImageProcessor.from_pretrained(model_name)

# Load an image from a URL and make sure it has three RGB channels
url = "https://example.com/sample-image.jpg"
response = requests.get(url, stream=True)
response.raise_for_status()
image = Image.open(response.raw).convert("RGB")

# Preprocess the image (resize and normalize) and return PyTorch tensors
inputs = image_processor(images=image, return_tensors="pt")

# Perform inference without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)
logits = outputs.logits

# Determine the predicted class
predicted_class_idx = logits.argmax(-1).item()
predicted_class = model.config.id2label[predicted_class_idx]
print("Predicted Class:", predicted_class)

7.2 Additional Notes:

- Ensure the transformers, torch, Pillow (PIL), and requests libraries are installed in your Python environment. You can install them using:

pip install transformers torch pillow requests

- Replace "https://example.com/sample-image.jpg" with the image URL you wish to classify.

- The pre-trained model "google/vit-base-patch16-224" was pre-trained on ImageNet-21k and fine-tuned on ImageNet-1k (1,000 classes) at 224×224 resolution, so it can only predict those 1,000 classes. Ensure that the images you classify are relevant to these classes for accurate predictions.

7.3 Further Resources:

For more detailed information and advanced usage, refer to the Hugging Face Transformers documentation, in particular the ViT model page and the image classification task guide.

These resources provide comprehensive guides on fine-tuning ViT models, handling custom datasets, and leveraging the full capabilities of the Transformers library for image classification tasks.

8. Conclusion

Vision Transformers (ViTs) represent a major shift in computer vision, using transformer architectures and self-attention mechanisms to process images as sequences of patches. Unlike traditional CNNs, ViTs rely less on inductive biases but offer greater flexibility, excelling particularly at capturing global relationships within images.

While ViTs initially need larger datasets or extensive pre-training, at scale they can match or exceed CNN accuracy with less pre-training compute. Successful ViT deployments often leverage pre-trained models, fine-tuning them using frameworks like Hugging Face.

Their unified architecture supports diverse tasks, including image classification, detection (ViT backbones such as ViTDet, alongside transformer-based detectors like DETR), segmentation, video understanding, generative modeling, and multi-modal integrations such as CLIP. Ongoing research continues to improve ViTs' efficiency through hybrid approaches and attention schemes like Swin Transformer, making them increasingly practical.

Practitioners should embrace ViTs, using tools like Lightly to address data limitations and fully leverage their capabilities efficiently. ViTs’ versatility and scalability mark them as a transformative force with a bright future in machine learning.

See Lightly in Action

If your team struggles with high labeling costs, overwhelming computer vision datasets, or inefficient data selection, you’re not alone. Training AI models with the right data is crucial, but manually sorting and labeling massive datasets slows down development and drives up costs.

Lightly streamlines this process with three specialized tools, helping you focus on the most valuable data for your models:

- LightlyEdge: Capture and filter high-value data directly on-device, reducing bandwidth and storage needs for real-time applications.

- LightlyOne: A data curation platform that automates data selection and offers insights to build cleaner, more efficient datasets, cutting labeling costs and improving model accuracy.

- LightlyTrain: Use self-supervised learning to pretrain models with minimal labeled data, enhancing performance across industries.

With Lightly, you can build smarter, more efficient computer vision models without the data bottlenecks. Get started today!
